The Importance of Software Testing

17 Feb, 2021

A Deadly Bug

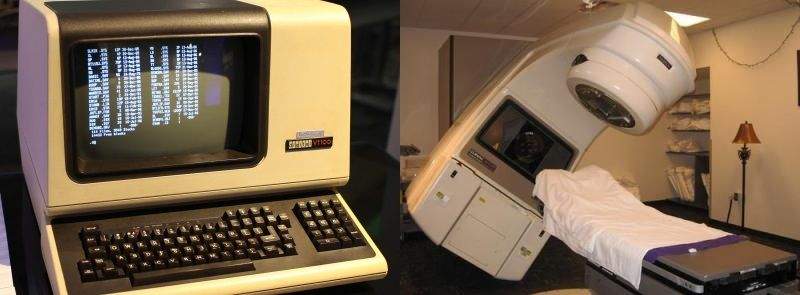

On March 21st, 1986, a man walked into the East Texas Cancer Center (ETCC) to receive radiation therapy for a tumor on his upper back. It was his ninth visit, so everything felt pretty ordinary. He entered the treatment room and lay down underneath a big, bulky Therac-25 machine (pictured above). The operator, in the control room next door, began the treatment. MIT engineer Nancy Leveson recounted the story in a later report:

She entered the patient’s prescription data quickly, then noticed that she had typed “x” (for X-ray) when she had intended “e” (for electron) mode. This was a common mistake as most of the treatments involved X-rays, and she had gotten used to typing this. The mistake was easy to fix[.]

Once the typo had been fixed, the machine was ready to go.

She hit the one-key command, ‘B’ for beam on, to begin the treatment. After a moment, the machine shut down and the console displayed the message MALFUNCTION 54[.]

After hundreds of treatments, the operator had gotten used to little glitches and quirks that occasionally caused the machine to delay or freeze up. The error indicated the dose delivered was too low, so she did what she’d done plenty of times before: hit the ‘P’ key to proceed with the treatment.

She had no way of knowing that, on the other side of the wall, her patient was being overwhelmed with deadly levels of radiation.

Why the Therac Failed

The story of the Therac-25 radiation machine is complicated and tragic. And it still resonates three and a half decades later, because of what it reveals about software testing.

Yes, software testing. Neither the radiation therapist at ETCC nor her patient had any notion that their extremely bad day was the result of actions taken by business executives years prior, hundreds of miles away, who’d failed to realize how necessary it was to vet their machine’s code before releasing it to production.

Their failures are lessons for us today.

Lesson #1: Only Testing Accounts for Real-Life Use Cases

Software testing (quality control, quality assurance, penetration testing and the like) may be the least sexy line of work in the entire tech industry. But deliberate, controlled and thorough code analysis is as critical to the success of a software product as the actual writing of the code.

For one thing, because software testers don’t actually write the code they analyze, they’re able to approach it with fresh eyes. So you end up with a better result than what developers could come up with on their own.

More importantly, software testers go beyond what developers can do with automated bug-detecting tools by adding a human touch to the process. They run code under different conditions, on various devices (if applicable), following their instincts to spot errors. In short, they use the software in all the ways an end user might, to predict all the issues that would otherwise have become known only after the product has shipped.

According to court testimony, the machine’s manufacturer, Atomic Energy of Canada Limited (AECL), did only “minimal” testing of the Therac-25 source code, under simulated conditions. In that light, we can see why they failed to identify Malfunction 54.

- It was a rare bug. By March 21st, 1986, ETCC had already treated over 500 patients with their Therac-25, problem-free.

- The radiation operator made characteristically human errors: a typo, and a habit of dismissing error messages.

- The problem was entirely undetectable at the system level. Without a human patient to get up and bang on the door of the control room, the operator wouldn’t have ever known there was a problem. (It stands to reason that, even if AECL had come across Malfunction 54 in testing, the “simulated” testing environment wouldn’t have revealed anything wrong.)
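To make the "human error" point concrete, here is a minimal Python sketch of testing the way an operator actually types. It replays every short sequence of keypresses, typos and corrections included, against a toy console model, and reports any sequence that turns the beam on while the mode on screen and the mode the hardware is set up for disagree. The `Console` class, its fields and `find_violations` are hypothetical stand-ins for illustration, not a reconstruction of AECL's software.

```python
from itertools import product


class Console:
    """Hypothetical operator console: 'x'/'e' select a mode, 'b' requests beam on."""

    def __init__(self):
        self.selected = None    # mode shown on the operator's screen
        self.configured = None  # mode the (simulated) hardware is set up for
        self.beam_on = False

    def press(self, key):
        if key in ("x", "e"):
            # A correct implementation keeps screen and hardware in sync.
            self.selected = key
            self.configured = key
        elif key == "b" and self.selected is not None:
            self.beam_on = True


def find_violations(max_len=4):
    """Replay every key sequence up to max_len and collect unsafe ones."""
    violations = []
    for length in range(1, max_len + 1):
        for keys in product("xeb", repeat=length):
            console = Console()
            for key in keys:
                console.press(key)
            # Safety invariant: never fire with screen and hardware out of sync.
            if console.beam_on and console.selected != console.configured:
                violations.append("".join(keys))
    return violations


if __name__ == "__main__":
    print(find_violations())
```

For this toy model the search comes back empty. The value of the approach is that a console with a state-handling bug would surface as a concrete, replayable key sequence such as "x, then e, then b", which is exactly the typo-and-fix pattern from the ETCC accident.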

Lesson #2: Even Good, Battle-Tested Code Can Fail

It would be easy to write off AECL as negligent. While negligence certainly contributed to the Therac-25’s failures, it doesn’t tell the whole story, because AECL had a perfectly logical reason to skimp on software testing: they were using legacy code.

The Therac-25 was merely a newer model of the Therac-20, itself a newer iteration of the Therac-6. If the code had worked the first two times, why would it pose any issues the third time around?

Unfortunately, even longstanding, battle-tested code can fail when placed under unique conditions. This is true of older software running on newer devices and operating systems. It’s true of popular open-source packages which, on their own, may be perfectly fine, but bundled together with other components can cause major performance issues.

For the Therac-25, the problem was even simpler. The 6 and 20 had used hardware interlocks to prevent the kinds of malfunctions that might cause radiation overdoses. The Therac-25 got rid of those hardware failsafes, reassigning the job of safety to the software itself. But the software couldn’t make up the difference.

The software did not contain self-checks or other error-detection and error-handling features [. . .] The Therac-25 software “lied” to the operators, and the machine itself was not capable of detecting that a massive overdose had occurred.
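As a rough illustration of what a software "self-check" might look like, the Python sketch below compares what was requested against values read back from independent sensors, and refuses to proceed with a descriptive error if they disagree. Every name here (`Mode`, `SensorReadback`, `self_check`, `InterlockError`) and the dose units are assumptions made up for this example; it is not how the Therac-25 or any real radiotherapy system is implemented.

```python
from dataclasses import dataclass
from enum import Enum


class Mode(Enum):
    XRAY = "x"
    ELECTRON = "e"


class InterlockError(RuntimeError):
    """Raised when a self-check fails; the caller must keep the beam off."""


@dataclass(frozen=True)
class SensorReadback:
    # Hypothetical values read from independent sensors,
    # not simply echoed back from the operator's command.
    hardware_mode: Mode
    delivered_dose: float  # dose measured so far, in arbitrary units


def self_check(requested_mode: Mode, prescribed_dose: float,
               readback: SensorReadback) -> None:
    """Fail closed: raise a descriptive error unless every check passes."""
    if readback.hardware_mode is not requested_mode:
        raise InterlockError(
            f"hardware is configured for {readback.hardware_mode.name}, "
            f"but {requested_mode.name} was requested"
        )
    if readback.delivered_dose > prescribed_dose:
        raise InterlockError(
            f"delivered dose {readback.delivered_dose} exceeds "
            f"prescribed dose {prescribed_dose}"
        )
```

Two design choices are worth noting. The check fails closed, so any disagreement stops the treatment rather than letting the operator press a key to proceed. And the error says what is wrong in plain words, unlike a bare code such as MALFUNCTION 54.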

Lesson #3: Testing is a Hedge Against Overconfidence

A common mistake in engineering, in this case and in many others, is to put too much confidence in software. There seems to be a feeling among non-software professionals that software will not or cannot fail, which leads to complacency and overreliance on computer functions.

Business executives without technical backgrounds sometimes misunderstand the limitations of the software they’re selling. And companies that believe strongly in their products and employees occasionally extend that faith too far. Even developers can fall into the trap of overconfidence. After all, programmers tend to be highly intelligent people, and that can bleed into hubris (one well-documented phenomenon is that of the developer who interprets a bug report as a personal attack).

Atomic Energy of Canada Limited (AECL) had been building radiation machines for years. They were perfectly capable, and experienced, and backed by the Canadian government. Perhaps it was because they were so qualified, so experienced, that they placed too much trust in their code.

They should have been more concerned. According to reports, the entirety of Therac-25’s code was written by just one person, and was never subjected to independent review.

Lesson #4: Testing is Cost-Effective

Doing quality control and quality assurance can be tedious, but it is just about guaranteed to save time and money in the long run. It means fewer angry customers calling into customer service and technical support. It means that fewer engineering hours have to be spent on patches post-release. And, critically, it minimizes (though never fully eliminates) the risk of catastrophic failure.

AECL may have saved a bit of time and money by cutting corners on their Therac-25 R&D. But the litigation they faced in the years that followed more than erased those savings.

Consequences of Bad Software

Most software bugs will cause only minor annoyances for end users. Sometimes, they can seriously slow down or completely freeze an application. Occasionally, they can cause much greater harm. For example, a program with security vulnerabilities can become the launch point for a breach of user or company data, or for attacks against other companies along a supply chain.

In extremely rare cases, the consequences of untested software can be catastrophic.

On March 21st, 1986, while ETCC’s radiation therapist sat calmly at her monitor, disaster was unfolding in the treatment room.

After the first attempt to treat him, the patient said that he felt as if he had received an electric shock or that someone had poured hot coffee on his back [. . .] Since this was his ninth treatment, he knew that this was not normal.

At the moment the operator saw the first error message, her patient was working out how to get out of a bad situation. He then made a fateful decision.

He began to get up from the treatment table to go for help. It was at this moment that the operator hit the ‘P’ key to proceed with the treatment. The patient said that he felt like his arm was being shocked by electricity and that his hand was leaving his body.

He rushed over and began pounding on the door. The radiation therapist–who, to this point, sensed nothing wrong–was shocked.

Immediately, a physician was rushed in to examine the patient. There was some redness in the areas hit by the beam, but nothing more. They didn’t realize, even at this point, that the man had received a massive overdose of radiation.

Over the weeks following the accident, the patient continued to have pain in his neck and shoulder. He lost the function of his left arm and had periodic bouts of nausea and vomiting. He was eventually hospitalized [. . .] He died from complications of the overdose five months after the accident.

All because of a bug.
